Different families of instance types fit different use cases, such as memory-intensive or compute-intensive workloads. For technical information about gp2 and gp3, see Amazon EBS volume types. You SSH into worker nodes the same way that you SSH into the driver node.

Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes. Databricks provisions EBS volumes for every worker node, including a 30 GB encrypted EBS instance root volume used only by the host operating system and Databricks internal services. Databricks recommends that you add a separate policy statement for each tag.

An example global init script, stored at "dbfs:/databricks/init/set_spark_params.sh", uses cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf to write the setting "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC".

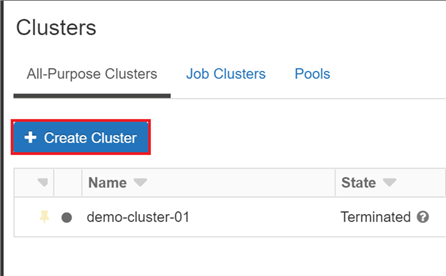

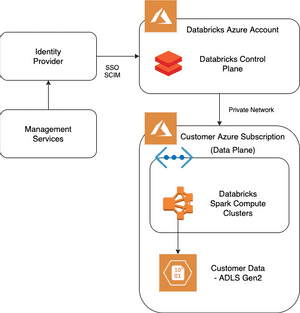

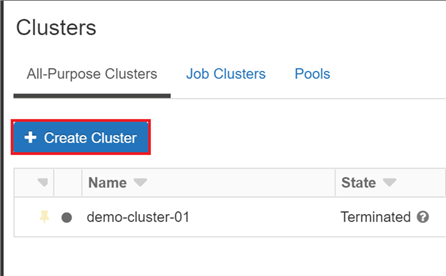

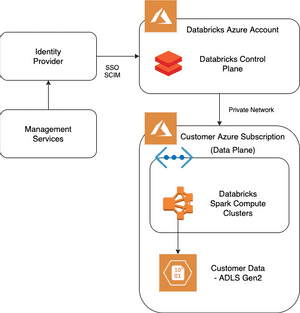

A secret reference in a Spark configuration property looks like spark.password {{secrets/acme-app/password}}. IAM policy statements that target instances use a resource such as "arn:aws:ec2:region:accountId:instance/*".

Depending on the constant size of the cluster and the workload, autoscaling gives you one or both of its benefits (faster workloads and lower overall cost) at the same time. You can choose a larger driver node type with more memory if you plan to collect() a lot of data from Spark workers and analyze it in the notebook. Databricks runtimes are the set of core components that run on your clusters. For details, see Databricks runtimes.

To use EBS volumes, your IAM role or keys must include the permissions ec2:AttachVolume, ec2:CreateVolume, ec2:DeleteVolume, and ec2:DescribeVolumes. Auto-AZ retries in other availability zones if AWS returns insufficient capacity errors. Cluster policy rules limit the attributes or attribute values available for cluster creation.
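As a rough illustration of the four EBS permissions listed above, the sketch below assembles an IAM policy statement as a Python dictionary. The "Resource" value of "*" and the surrounding policy wrapper are placeholder assumptions for illustration, not the policy Databricks prescribes; consult the cross-account IAM role instructions for the full policy.

```python
import json

# The four EBS actions named in the text above.
EBS_ACTIONS = [
    "ec2:AttachVolume",
    "ec2:CreateVolume",
    "ec2:DeleteVolume",
    "ec2:DescribeVolumes",
]


def ebs_policy_statement(resource="*"):
    """Return one IAM policy statement that allows the EBS volume actions.

    The default resource "*" is a placeholder assumption; narrow it to
    match your own account's requirements.
    """
    return {"Effect": "Allow", "Action": list(EBS_ACTIONS), "Resource": resource}


if __name__ == "__main__":
    # Wrap the statement in a minimal policy document and print it as JSON.
    policy = {"Version": "2012-10-17", "Statement": [ebs_policy_statement()]}
    print(json.dumps(policy, indent=2))
```

Keeping each concern in its own statement follows the same reasoning as the recommendation to use a separate statement per tag: the policy gets longer but is easier to debug.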
You can also Run All commands in the notebook, or Run All Above or Run All Below the current cell. Copy the driver node hostname. When accessing a view from a cluster with Single User security mode, the view is executed with the user's permissions.

The next challenge is to learn more Python, R, or Scala to build robust and effective processes and analyse the data smartly. Azure Databricks offers optimized Spark clusters and a collaborative workspace in which business analysts, data scientists, and data engineers can code and analyse data faster.

When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. In your AWS console, find the Databricks security group. When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. To enable Photon acceleration, select the Use Photon Acceleration checkbox. If you don't want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. With an Azure free trial subscription, you cannot use a cluster that utilizes more than 4 cores.
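The log delivery location mentioned above can also be expressed in a cluster specification. The sketch below builds that fragment in Python. The field names cluster_log_conf, dbfs, and destination are written from memory of the Clusters API and should be checked against the API reference; the destination path is a hypothetical placeholder.

```python
def cluster_log_conf(destination):
    """Build the log-delivery fragment of a cluster specification.

    Only DBFS destinations are handled in this sketch; the field names are
    assumptions to verify against the Clusters API reference.
    """
    if not destination.startswith("dbfs:/"):
        raise ValueError("this sketch only accepts dbfs:/ destinations")
    return {"cluster_log_conf": {"dbfs": {"destination": destination}}}


if __name__ == "__main__":
    # "dbfs:/cluster-logs" is a hypothetical destination.
    print(cluster_log_conf("dbfs:/cluster-logs"))
```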
If you have the cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters. See AWS spot pricing. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. To configure EBS volumes, click the Instances tab in the cluster configuration and select an option in the EBS Volume Type drop-down list.

When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated. You must update the Databricks security group in your AWS account to give ingress access to the IP address from which you will initiate the SSH connection.

To create a workspace, enter a name for it and select an existing Subscription, Resource group, and Location. Then select one of the options available in Pricing Tier; right above the list there is a link to full pricing details. Click Launch Workspace and you'll leave the Azure Portal for a new browser tab where you can start working with Databricks. You can use the Import operation when creating a new notebook to use an existing file from your local machine.

A cluster consists of one driver node and zero or more worker nodes. You can compare the number of allocated workers with the worker configuration and make adjustments as needed. You can also use Docker images to create custom deep learning environments on clusters with GPU devices.
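Once the security group allows ingress from your IP address, the SSH connection itself is a single command. The sketch below assembles it as an argument list. The user name ubuntu and port 2200 are assumptions recalled from the Databricks SSH instructions rather than stated in this text, and the hostname and key path are hypothetical placeholders.

```python
import shlex


def ssh_command(hostname, key_path, user="ubuntu", port=2200):
    """Return the argv list for an SSH connection to a cluster node.

    The default user and port are assumptions; confirm them against the
    SSH instructions for your workspace.
    """
    return ["ssh", f"{user}@{hostname}", "-p", str(port), "-i", key_path]


if __name__ == "__main__":
    # Hypothetical hostname and private key file path.
    cmd = ssh_command("driver-node.example.com", "/home/me/.ssh/databricks_key")
    print(shlex.join(cmd))
```

Building the command as a list rather than a string avoids quoting problems if the key path contains spaces.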
Run the SSH command, replacing the hostname and private key file path with your own.

On all-purpose clusters, autoscaling scales down if the cluster is underutilized over the last 150 seconds. On job clusters, it scales down if the cluster is underutilized over the last 40 seconds. Increasing the value causes a cluster to scale down more slowly. When you provide a fixed size cluster, Databricks ensures that your cluster has the specified number of workers.

Enable logging under Job > Configure Cluster > Spark > Logging. First, Photon operators start with Photon, for example, PhotonGroupingAgg. Automated jobs should use single-user clusters. Notebooks let you use a different language for each command.

To set Spark properties for all clusters, create a global init script. Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext.

Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. See Pools to learn more about working with pools in Databricks. You can enable local disk encryption in a cluster create call. If your workspace is assigned to a Unity Catalog metastore, you use security mode instead of High Concurrency cluster mode to ensure the integrity of access controls and enforce strong isolation guarantees. High Concurrency clusters do not terminate automatically by default. Available in Databricks Runtime 8.3 and above.

To create a Single Node cluster, set Cluster Mode to Single Node. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes.
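To make the local disk encryption option concrete, here is a minimal sketch of a request body for a cluster create call, built in Python. The field enable_local_disk_encryption and the other field names follow the Clusters API as best recalled; the cluster name, runtime version, node type, and worker count are hypothetical placeholders, and the call itself is not sent.

```python
import json


def create_cluster_payload(name, spark_version, node_type_id, num_workers,
                           encrypt_local_disks=True):
    """Assemble a cluster create request body with local disk encryption.

    Field names are assumptions to verify against the Clusters API
    reference before use.
    """
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        "enable_local_disk_encryption": encrypt_local_disks,
    }


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    payload = create_cluster_payload(
        "encrypted-cluster", "7.3.x-scala2.12", "r3.xlarge", 3
    )
    print(json.dumps(payload, indent=2))
```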
This hosts Spark services and logs. If you can't see it, go to All services and type Databricks in the search field. Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. The overall policy might become long, but it is easier to debug.

User Isolation: Can be shared by multiple users. For more information, see Cluster security mode. In Spark config, enter the configuration properties as one key-value pair per line. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags.

This is referred to as autoscaling. Autoscaling scales down based on a percentage of current nodes. These settings are applied during cluster creation or edit. See Create and Edit in the Clusters API reference for examples of how to invoke these APIs.

Markdown (MD) is also available in notebooks for comments, sections, and self-documentation.

Once you have created an instance profile, you select it in the Instance Profile drop-down list. Once a cluster launches with an instance profile, anyone who has attach permissions to this cluster can access the underlying resources controlled by this role.

Add the init script under Job > Configure Cluster > Spark > Init Scripts. It needs to be added to each automated cluster. On a free trial subscription, try creating a Single Node cluster, which consumes only 4 cores (the driver cores) and so does not exceed the limit.

To reference a secret in the Spark configuration, use the syntax spark.<property-name> {{secrets/<scope-name>/<secret-name>}}. For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme-app/password, the property value is {{secrets/acme-app/password}}. For more information, see Syntax for referencing secrets in a Spark configuration property or environment variable.
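The two rules above (one key-value pair per line, and the double-brace secret reference) can be combined in a small helper. This is a sketch for illustration only: the function names are hypothetical, and the scope and key values reuse the acme-app example.

```python
def secret_ref(scope, key):
    """Return a secret reference in the {{secrets/<scope>/<key>}} form."""
    return "{{secrets/" + scope + "/" + key + "}}"


def render_spark_config(props):
    """Render properties as one 'key value' pair per line, as the
    Spark config field expects."""
    return "\n".join(f"{name} {value}" for name, value in props.items())


if __name__ == "__main__":
    config = {
        "spark.password": secret_ref("acme-app", "password"),
        "spark.sql.sources.partitionOverwriteMode": "DYNAMIC",
    }
    print(render_spark_config(config))
```

Generating the reference rather than typing it by hand keeps the plaintext secret out of the configuration entirely, which is the point of the recommendation to store sensitive values in secrets.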
You cannot override these predefined environment variables. You can configure custom environment variables that you can access from init scripts running on a cluster. For the complete list of permissions and instructions on how to update your existing IAM role or keys, see Configure your AWS account (cross-account IAM role).

The workspace URL, for example https://northeurope.azuredatabricks.net/?o=4763555456479339#, contains the region as a subdomain and the unique ID of the Databricks instance. Wait and check whether the cluster is ready (Running). Each notebook can be exported to four file formats.

If you select a pool for worker nodes but not for the driver node, the driver node inherits the pool from the worker node configuration. For convenience, Databricks applies four default tags to each cluster: Vendor, Creator, ClusterName, and ClusterId. By default, the max price is 100% of the on-demand price. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers. There are two indications of Photon in the DAG.

To enable autoscaling:

All-Purpose cluster - On the Create Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box.

Job cluster - On the Configure Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box.

When the cluster is running, the cluster detail page displays the number of allocated workers. If you have access to cluster policies only, you can select the policies you have access to.
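The spot max price rule above is simple arithmetic: the maximum is a percentage of the on-demand price, with 100% as the default. The sketch below shows the calculation; the function name and the on-demand price used in the example are hypothetical.

```python
def max_spot_price(on_demand_price, percent=100):
    """Return the maximum spot price as a percentage of the on-demand price.

    percent defaults to 100, matching the default described in the text.
    """
    if percent <= 0:
        raise ValueError("percent must be positive")
    return on_demand_price * percent / 100


if __name__ == "__main__":
    # 0.50 is a made-up hourly on-demand price used only for illustration.
    print(max_spot_price(0.50))
    print(max_spot_price(0.50, percent=50))
```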
You can use init scripts to install packages and libraries not included in the Databricks runtime, modify the JVM system classpath, set system properties and environment variables used by the JVM, or modify Spark configuration parameters, among other configuration tasks. To configure cluster tags, click the Tags tab at the bottom of the page.
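As one example of modifying Spark configuration parameters from an init script, the sketch below generates the text of a script that writes driver defaults. The heredoc target path and the partitionOverwriteMode setting come from this page; the shebang line and the "[driver] { ... }" wrapper are assumptions about the file format and should be verified before use. The function only builds the script text and does not upload it.

```python
CONF_PATH = "/databricks/driver/conf/00-custom-spark-driver-defaults.conf"


def render_init_script(settings):
    """Return the text of a bash init script that writes Spark driver defaults.

    The "[driver] { ... }" wrapper is an assumed file format, not confirmed
    by the surrounding text.
    """
    lines = ["#!/bin/bash", "", f"cat << 'EOF' > {CONF_PATH}", "[driver] {"]
    for name, value in settings.items():
        lines.append(f'  "{name}" = "{value}"')
    lines += ["}", "EOF"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    script = render_init_script(
        {"spark.sql.sources.partitionOverwriteMode": "DYNAMIC"}
    )
    print(script)
```

The resulting text would then be stored at a location such as dbfs:/databricks/init/set_spark_params.sh and attached to the cluster as an init script.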

When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. In your AWS console, find the Databricks security group. When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. To enable Photon acceleration, select the Use Photon Acceleration checkbox. This category only includes cookies that ensures basic functionalities and security features of the website. How is making a down payment different from getting a smaller loan? If you dont want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. Using Databricks with Azure free trial subscription, we cannot use a cluster that utilizes more than 4 cores. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. Cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters. See AWS spot pricing. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. These cookies will be stored in your browser only with your consent. By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated. You must update the Databricks security group in your AWS account to give ingress access to the IP address from which you will initiate the SSH connection. You can refer to the following document to understand more about single node cluster. Yes. To configure EBS volumes, click the Instances tab in the cluster configuration and select an option in the EBS Volume Type drop-down list. Cannot access Unity Catalog data. 
Click Launch Workspace and youll go out of Azure Portal to the new tab in your browser to start working with Databricks. You can compare number of allocated workers with the worker configuration and make adjustments as needed. Put a required name for your workspace, select existing Subscription, Resource group and Location: Select one option from available in Pricing Tier: Right above the list there is a link to full pricing details. To view or add a comment, sign in, Great post Sunil ! A cluster consists of one driver node and zero or more worker nodes. You can also use Docker images to create custom deep learning environments on clusters with GPU devices. You can utilize Import operation when creating new Notebook to use existing file from your local machine. Run the following command, replacing the hostname and private key file path. On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds. Enable logging Job > Configure Cluster > Spark > Logging. First, Photon operators start with Photon, for example, PhotonGroupingAgg. When you provide a fixed size cluster, Databricks ensures that your cluster has the specified number of workers. Increasing the value causes a cluster to scale down more slowly. Automated jobs should use single-user clusters. That means you can use a different language for each command. All rights reserved. To set Spark properties for all clusters, create a global init script: Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext. I have realized you are using a trial version, and I think the other answer is correct. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. See Pools to learn more about working with pools in Databricks. 
Here is an example of a cluster create call that enables local disk encryption: If your workspace is assigned to a Unity Catalog metastore, you use security mode instead of High Concurrency cluster mode to ensure the integrity of access controls and enforce strong isolation guarantees. By clicking Accept All, you consent to the use of ALL the cookies. On job clusters, scales down if the cluster is underutilized over the last 40 seconds. GeoSpark using Maven UDF running Databricks on Azure? rev2022.7.29.42699. High Concurrency clusters do not terminate automatically by default. Am I building a good or bad model for prediction built using Gradient Boosting Classifier Algorithm? in the pool. Available in Databricks Runtime 8.3 and above. To create a Single Node cluster, set Cluster Mode to Single Node. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. This hosts Spark services and logs. If you cant see it go to All services and input Databricks in the searching field. Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. The overall policy might become long, but it is easier to debug. User Isolation: Can be shared by multiple users. In Spark config, enter the configuration properties as one key-value pair per line. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags. This is referred to as autoscaling. Furthermore, MarkDown (MD) language is also available to make comments, create sections and self like-documentation. Scales down based on a percentage of current nodes. If the specified destination is Why are the products of Grignard reaction on an alpha-chiral ketone diastereomers rather than a racemate? During cluster creation or edit, set: See Create and Edit in the Clusters API reference for examples of how to invoke these APIs. 
Once you have created an instance profile, you select it in the Instance Profile drop-down list: Once a cluster launches with an instance profile, anyone who has attach permissions to this cluster can access the underlying resources controlled by this role. Add the following under Job > Configure Cluster > Spark > Init Scripts. Making statements based on opinion; back them up with references or personal experience. For more information, see Cluster security mode. It needs to be copied on each Automated Clusters. So, try creating a Single Node Cluster which only consumes 4 cores (driver cores) which does not exceed the limit. To reference a secret in the Spark configuration, use the following syntax: For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme_app/password: For more information, see Syntax for referencing secrets in a Spark configuration property or environment variable. You cannot override these predefined environment variables. That is normal. For the complete list of permissions and instructions on how to update your existing IAM role or keys, see Configure your AWS account (cross-account IAM role). https://northeurope.azuredatabricks.net/?o=4763555456479339#. If you select a pool for worker nodes but not for the driver node, the driver node inherit the pool from the worker node configuration. has been included for your convenience. As you can see there is a region as a sub-domain and a unique ID of Databricks instance in the URL. For convenience, Databricks applies four default tags to each cluster: Vendor, Creator, ClusterName, and ClusterId. This website uses cookies to improve your experience while you navigate through the website. By default, the max price is 100% of the on-demand price. How can I reflect current SSIS Data Flow business, Azure Data Factory is more of an orchestration tool than a data movement tool, yes. 
Wait and check whether the cluster is ready (Running): Each notebook can be exported to 4 various file format. You can configure custom environment variables that you can access from init scripts running on a cluster. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers. There are two indications of Photon in the DAG. All-Purpose cluster - On the Create Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: Job cluster - On the Configure Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: When the cluster is running, the cluster detail page displays the number of allocated workers. Access to cluster policies only, you can select the policies you have access to. The following link refers to a problem like the one you are facing. You can use init scripts to install packages and libraries not included in the Databricks runtime, modify the JVM system classpath, set system properties and environment variables used by the JVM, or modify Spark configuration parameters, among other configuration tasks. creation will fail. To configure cluster tags: At the bottom of the page, click the Tags tab.

Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes. Databricks provisions EBS volumes for every worker node as follows: A 30 GB encrypted EBS instance root volume used only by the host operating system and Databricks internal services. What is Apache Spark Structured Streaming? Data Platform MVP, MCSE. Databricks recommends that you add a separate policy statement for each tag. | Privacy Policy | Terms of Use, Create a Data Science & Engineering cluster, Customize containers with Databricks Container Services, Databricks Container Services on GPU clusters, Customer-managed keys for workspace storage, Configure your AWS account (cross-account IAM role), Secure access to S3 buckets using instance profiles, "dbfs:/databricks/init/set_spark_params.sh", |cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf, | "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC", spark.

Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes. Databricks provisions EBS volumes for every worker node as follows: A 30 GB encrypted EBS instance root volume used only by the host operating system and Databricks internal services. What is Apache Spark Structured Streaming? Data Platform MVP, MCSE. Databricks recommends that you add a separate policy statement for each tag. | Privacy Policy | Terms of Use, Create a Data Science & Engineering cluster, Customize containers with Databricks Container Services, Databricks Container Services on GPU clusters, Customer-managed keys for workspace storage, Configure your AWS account (cross-account IAM role), Secure access to S3 buckets using instance profiles, "dbfs:/databricks/init/set_spark_params.sh", |cat << 'EOF' > /databricks/driver/conf/00-custom-spark-driver-defaults.conf, | "spark.sql.sources.partitionOverwriteMode" = "DYNAMIC", spark. When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. In your AWS console, find the Databricks security group. When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. To enable Photon acceleration, select the Use Photon Acceleration checkbox. This category only includes cookies that ensures basic functionalities and security features of the website. How is making a down payment different from getting a smaller loan? If you dont want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. Using Databricks with Azure free trial subscription, we cannot use a cluster that utilizes more than 4 cores. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. 
Cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters. See AWS spot pricing. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. These cookies will be stored in your browser only with your consent. By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated. You must update the Databricks security group in your AWS account to give ingress access to the IP address from which you will initiate the SSH connection. You can refer to the following document to understand more about single node cluster. Yes. To configure EBS volumes, click the Instances tab in the cluster configuration and select an option in the EBS Volume Type drop-down list. Cannot access Unity Catalog data. Click Launch Workspace and youll go out of Azure Portal to the new tab in your browser to start working with Databricks. You can compare number of allocated workers with the worker configuration and make adjustments as needed. Put a required name for your workspace, select existing Subscription, Resource group and Location: Select one option from available in Pricing Tier: Right above the list there is a link to full pricing details. To view or add a comment, sign in, Great post Sunil ! A cluster consists of one driver node and zero or more worker nodes. You can also use Docker images to create custom deep learning environments on clusters with GPU devices. You can utilize Import operation when creating new Notebook to use existing file from your local machine. Run the following command, replacing the hostname and private key file path. On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds. 
Enable logging Job > Configure Cluster > Spark > Logging. First, Photon operators start with Photon, for example, PhotonGroupingAgg. When you provide a fixed size cluster, Databricks ensures that your cluster has the specified number of workers. Increasing the value causes a cluster to scale down more slowly. Automated jobs should use single-user clusters. That means you can use a different language for each command. All rights reserved. To set Spark properties for all clusters, create a global init script: Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext. I have realized you are using a trial version, and I think the other answer is correct. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. See Pools to learn more about working with pools in Databricks. Here is an example of a cluster create call that enables local disk encryption: If your workspace is assigned to a Unity Catalog metastore, you use security mode instead of High Concurrency cluster mode to ensure the integrity of access controls and enforce strong isolation guarantees. By clicking Accept All, you consent to the use of ALL the cookies. On job clusters, scales down if the cluster is underutilized over the last 40 seconds. GeoSpark using Maven UDF running Databricks on Azure? rev2022.7.29.42699. High Concurrency clusters do not terminate automatically by default. Am I building a good or bad model for prediction built using Gradient Boosting Classifier Algorithm? in the pool. Available in Databricks Runtime 8.3 and above. To create a Single Node cluster, set Cluster Mode to Single Node. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. This hosts Spark services and logs. If you cant see it go to All services and input Databricks in the searching field. 
Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters. The overall policy might become long, but it is easier to debug. User Isolation: Can be shared by multiple users. In Spark config, enter the configuration properties as one key-value pair per line. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags. This is referred to as autoscaling. Furthermore, MarkDown (MD) language is also available to make comments, create sections and self like-documentation. Scales down based on a percentage of current nodes. If the specified destination is Why are the products of Grignard reaction on an alpha-chiral ketone diastereomers rather than a racemate? During cluster creation or edit, set: See Create and Edit in the Clusters API reference for examples of how to invoke these APIs. Once you have created an instance profile, you select it in the Instance Profile drop-down list: Once a cluster launches with an instance profile, anyone who has attach permissions to this cluster can access the underlying resources controlled by this role. Add the following under Job > Configure Cluster > Spark > Init Scripts. Making statements based on opinion; back them up with references or personal experience. For more information, see Cluster security mode. It needs to be copied on each Automated Clusters. So, try creating a Single Node Cluster which only consumes 4 cores (driver cores) which does not exceed the limit. To reference a secret in the Spark configuration, use the following syntax: For example, to set a Spark configuration property called password to the value of the secret stored in secrets/acme_app/password: For more information, see Syntax for referencing secrets in a Spark configuration property or environment variable. You cannot override these predefined environment variables. That is normal. 
For the complete list of permissions and instructions on how to update your existing IAM role or keys, see Configure your AWS account (cross-account IAM role). https://northeurope.azuredatabricks.net/?o=4763555456479339#. If you select a pool for worker nodes but not for the driver node, the driver node inherit the pool from the worker node configuration. has been included for your convenience. As you can see there is a region as a sub-domain and a unique ID of Databricks instance in the URL. For convenience, Databricks applies four default tags to each cluster: Vendor, Creator, ClusterName, and ClusterId. This website uses cookies to improve your experience while you navigate through the website. By default, the max price is 100% of the on-demand price. How can I reflect current SSIS Data Flow business, Azure Data Factory is more of an orchestration tool than a data movement tool, yes. Wait and check whether the cluster is ready (Running): Each notebook can be exported to 4 various file format. You can configure custom environment variables that you can access from init scripts running on a cluster. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers. There are two indications of Photon in the DAG. All-Purpose cluster - On the Create Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: Job cluster - On the Configure Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: When the cluster is running, the cluster detail page displays the number of allocated workers. Access to cluster policies only, you can select the policies you have access to. The following link refers to a problem like the one you are facing. 
You can use init scripts to install packages and libraries not included in the Databricks runtime, modify the JVM system classpath, set system properties and environment variables used by the JVM, or modify Spark configuration parameters, among other configuration tasks. To configure cluster tags, click the Tags tab at the bottom of the page.
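If you manage cluster definitions in code rather than through the Tags tab, the tags can be expressed as a small fragment of the cluster specification. The sketch below assumes the `custom_tags` field of the Clusters API; the helper name and the guard against reusing the four default tag keys are a local convention for this example, not documented Databricks behavior.

```python
from typing import Dict

# The four default tags that the text says Databricks applies to each cluster.
DEFAULT_TAG_KEYS = {"Vendor", "Creator", "ClusterName", "ClusterId"}


def build_custom_tags(tags: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Return a cluster spec fragment holding custom tags (hypothetical helper)."""
    clashes = DEFAULT_TAG_KEYS.intersection(tags)
    if clashes:
        # Local guard only: avoid shadowing the default tag names.
        raise ValueError("tag keys clash with default tags: %s" % sorted(clashes))
    return {"custom_tags": dict(tags)}


print(build_custom_tags({"team": "data-eng", "cost-center": "1234"}))
```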

When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. When a cluster is terminated, Databricks guarantees to deliver all logs generated up until the cluster was terminated.

In your AWS console, find the Databricks security group. You must update the Databricks security group in your AWS account to give ingress access to the IP address from which you will initiate the SSH connection.

When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. To enable Photon acceleration, select the Use Photon Acceleration checkbox.

If you don't want to allocate a fixed number of EBS volumes at cluster creation time, use autoscaling local storage. For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. To configure EBS volumes, click the Instances tab in the cluster configuration and select an option in the EBS Volume Type drop-down list. See AWS spot pricing.

With an Azure free trial subscription, you cannot use a cluster that uses more than 4 cores. If you have cluster create permission, you can select the Unrestricted policy and create fully-configurable clusters.
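The log delivery and EBS volume settings described above can also be written as a fragment of a cluster specification. This is a sketch under assumptions: the field names (`cluster_log_conf`, `aws_attributes`, `ebs_volume_type`, `ebs_volume_count`, `ebs_volume_size`) follow the Clusters API as I understand it, while the helper name, the log destination, and the volume count and size are illustrative placeholders.

```python
import json
from typing import Any, Dict


def storage_and_logging_fragment(log_destination: str,
                                 ebs_volume_count: int,
                                 ebs_volume_size_gb: int) -> Dict[str, Any]:
    """Build a cluster spec fragment for log delivery and extra EBS volumes."""
    if ebs_volume_count < 1 or ebs_volume_size_gb < 1:
        raise ValueError("volume count and size must be positive")
    return {
        # Where driver, worker, and event logs are delivered.
        "cluster_log_conf": {"dbfs": {"destination": log_destination}},
        # Additional EBS volumes attached to each instance.
        "aws_attributes": {
            "ebs_volume_type": "GENERAL_PURPOSE_SSD",
            "ebs_volume_count": ebs_volume_count,
            "ebs_volume_size": ebs_volume_size_gb,
        },
    }


fragment = storage_and_logging_fragment("dbfs:/cluster-logs", 1, 100)
print(json.dumps(fragment, indent=2))
```

Check the resulting volume totals against your AWS EBS limits before rolling a fragment like this out to many clusters.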
Click Launch Workspace and you'll leave the Azure Portal for a new browser tab where you can start working with Databricks. Enter a name for your workspace and select an existing Subscription, Resource group, and Location. Select one of the available options in Pricing Tier; right above the list there is a link to full pricing details. You can use the Import operation when creating a new notebook to use an existing file from your local machine. That means you can use a different language for each command.

A cluster consists of one driver node and zero or more worker nodes. You can also use Docker images to create custom deep learning environments on clusters with GPU devices. Run the following command, replacing the hostname and private key file path. Enable logging under Job > Configure Cluster > Spark > Logging. First, Photon operators start with Photon, for example, PhotonGroupingAgg.

You can compare the number of allocated workers with the worker configuration and make adjustments as needed. When you provide a fixed size cluster, Databricks ensures that your cluster has the specified number of workers. On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds. Increasing the value causes a cluster to scale down more slowly.

Automated jobs should use single-user clusters. To set Spark properties for all clusters, create a global init script. Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. See Pools to learn more about working with pools in Databricks.
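To make the scale-down timing concrete, here is a toy model of the underutilization windows quoted in this document: 150 seconds for all-purpose clusters and 40 seconds for job clusters. It is only an illustration of the thresholds, not Databricks's actual autoscaling algorithm, and the names used are hypothetical.

```python
# Underutilization windows in seconds, as quoted in the text.
UNDERUTILIZED_WINDOW_SECONDS = {
    "all-purpose": 150,
    "job": 40,
}


def should_scale_down(cluster_kind: str, underutilized_seconds: float) -> bool:
    """Return True once the cluster has been underutilized for the full window."""
    window = UNDERUTILIZED_WINDOW_SECONDS[cluster_kind]
    return underutilized_seconds >= window


# A cluster idle for 60 seconds: a job cluster would shrink, an all-purpose one would not.
print(should_scale_down("job", 60))
print(should_scale_down("all-purpose", 60))
```

A larger window means a cluster waits longer before shrinking, which matches the remark that increasing the value makes scale-down slower.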
You can enable local disk encryption in a cluster create call. If your workspace is assigned to a Unity Catalog metastore, you use security mode instead of High Concurrency cluster mode to ensure the integrity of access controls and enforce strong isolation guarantees.

On job clusters, scales down if the cluster is underutilized over the last 40 seconds. High Concurrency clusters do not terminate automatically by default. Available in Databricks Runtime 8.3 and above.

To create a Single Node cluster, set Cluster Mode to Single Node. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. This hosts Spark services and logs. If you can't see it, go to All services and enter Databricks in the search field.
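A request body for a cluster create call that enables local disk encryption might look like the sketch below. It assumes the `enable_local_disk_encryption` field of the Clusters API; the cluster name, runtime version, node type, and worker count are placeholder values chosen for illustration, and the sketch only builds the JSON without sending it.

```python
import json
from typing import Any, Dict


def encrypted_cluster_payload(cluster_name: str,
                              spark_version: str,
                              node_type_id: str,
                              num_workers: int) -> Dict[str, Any]:
    """Build a create-cluster request body with local disk encryption enabled."""
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        "num_workers": num_workers,
        # Each node gets its own locally generated key for its local disks.
        "enable_local_disk_encryption": True,
    }


# All argument values here are placeholders, not recommendations.
payload = encrypted_cluster_payload("encrypted-demo", "7.3.x-scala2.12", "i3.xlarge", 2)
print(json.dumps(payload, indent=2))
```

Because encrypting local volumes can slow reads and writes, it is worth benchmarking a representative workload after enabling the flag.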